
Table of Contents

- About Best Online Machine Learning Courses And...

- The Single Strategy To Use For How Long Does I...

- The smart Trick of Machine Learning Crash Cou...

- 3 Easy Facts About Machine Learning In Produc...

- The smart Trick of Machine Learning Is Still...

- 9 Easy Facts About Top Machine Learning Care...

- The 45-Second Trick For How To Become A Mach...

Some people think that's cheating. Well, that's my whole career. If someone else did it, I'm going to use what that person did. The lesson is putting that aside. I'm forcing myself to think through the possible solutions. It's more about consuming the content and trying to apply those ideas, and less about finding a library that does the job or finding someone else who coded it.

Dig a little bit deeper into the math at the start, just so I can build that foundation. Santiago: Finally, lesson number seven. I don't think that you have to understand the nuts and bolts of every algorithm before you use it.

I have been using neural networks for the longest time. I do have a sense of how gradient descent works. I can't explain it to you right now. I would have to go back and check to actually get a better intuition. That doesn't mean that I can't solve problems using neural networks, right? (29:05) Santiago: Trying to force people to think "Well, you're not going to succeed unless you can explain every single detail of how this works." It goes back to our sorting example. I think that's just bullshit advice.

As an engineer, I've worked on many, many systems and I've used many, many things whose nuts and bolts I don't understand, even though I understand the impact that they have. That's the last lesson on that thread. Alexey: The funny thing is, when I think about all these libraries like Scikit-Learn, the algorithms they use internally to implement, for example, logistic regression or something else, are not the same as the algorithms we study in machine learning courses.
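Alexey's point can be made concrete. Many courses present logistic regression as plain batch gradient descent on the log-loss, which the NumPy sketch below implements. The toy data, learning rate, and iteration count are arbitrary illustrative choices. By contrast, scikit-learn's `LogisticRegression` defaults to the L-BFGS solver (`solver="lbfgs"`) with L2 regularization (`penalty="l2"`, `C=1.0`). So what runs inside the library is not this textbook loop, even when the predictions come out the same.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_logistic_gd(X, y, lr=0.1, n_iter=1000):
    """Textbook batch gradient descent on the mean log-loss (no regularization)."""
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    for _ in range(n_iter):
        p = sigmoid(X @ w + b)      # predicted probabilities
        error = p - y               # gradient of the log-loss w.r.t. the logits
        w -= lr * (X.T @ error) / n_samples
        b -= lr * error.mean()
    return w, b

def predict(X, w, b):
    return (sigmoid(X @ w + b) >= 0.5).astype(int)

# Toy 1-D data: the label switches from 0 to 1 at x = 0
X = np.array([[-2.5], [-1.5], [-0.5], [0.5], [1.5], [2.5]])
y = np.array([0, 0, 0, 1, 1, 1])

w, b = fit_logistic_gd(X, y)
print(predict(X, w, b))  # [0 0 0 1 1 1]
```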

About Best Online Machine Learning Courses And Programs

Even if we tried to learn all these fundamentals of machine learning, in the end, the algorithms that these libraries use are different. Santiago: Yeah, absolutely. I think we need a lot more pragmatism in the industry.

By the way, there are two different paths. I usually speak to those who want to work in the industry, who want to have their impact there. There is a path for researchers, and that is completely different. I don't dare to speak about that because I don't know it.

But out there, in the industry, pragmatism goes a long way for sure. Santiago: There you go, yeah. Alexey: It is a great motivational speech.

The Single Strategy To Use For How Long Does It Take To Learn “Machine Learning” From A ...

There's one of the things I wanted to ask you. First, let's cover a couple of things. Alexey: Let's start with the core tools and frameworks that you need to learn to actually make the transition.

I know Java. I know SQL. I know how to use Git. I know Bash. Maybe I know Docker. All these things. And I hear about machine learning, and it seems like a cool thing. So, what are the core tools and frameworks? Yes, I watched this video and I'm convinced that I don't need to get deep into math.

What are the core tools and frameworks that I need to learn to do this? (33:10) Santiago: Yeah, absolutely. Great question. I think, number one, you need to start learning a little bit of Python. Since you already know Java, I don't think it's going to be a huge shift for you.

That's not because Python is the same as Java, but in a week, you're going to pick up a lot of the differences. You're going to be able to make some progress. That's number one. (33:47) Santiago: Then you get certain core tools that you're going to use throughout your entire career.

The smart Trick of Machine Learning Crash Course That Nobody is Talking About

There's Pandas, a library for data manipulation. And there are Matplotlib, Seaborn, and Plotly: those three, or one of those three, for charting and displaying graphics. Then you get scikit-learn for the collection of machine learning algorithms. Those are tools that you're going to have to be using. I don't recommend just going and learning about them out of the blue.
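Here is a rough taste of the kind of data manipulation Pandas is used for. This minimal sketch works on a small, made-up table (the column names and values are hypothetical). It fills a missing value, derives a feature, and aggregates per group.

```python
import pandas as pd

# Hypothetical tabular data; in practice this would usually come from pd.read_csv(...)
df = pd.DataFrame({
    "city": ["Berlin", "Berlin", "Madrid", "Madrid", "Lisbon"],
    "rooms": [2, 3, 2, None, 1],
    "price": [900, 1300, 800, 1100, 650],
})

# Fill the missing room count with the column median
df["rooms"] = df["rooms"].fillna(df["rooms"].median())

# Derive a new feature, then aggregate it per city
df["price_per_room"] = df["price"] / df["rooms"]
summary = df.groupby("city")["price_per_room"].mean()
print(summary)
```

Calling `summary.plot(kind="bar")` would hand the result to Matplotlib, the default plotting backend for pandas. That is where the charting libraries Santiago mentions come in.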

Take one of those courses that are going to start introducing you to some problems and to some core ideas of machine learning. I don't remember the name, but if you go to Kaggle, they have tutorials there for free.

What's nice about it is that the only requirement for you is to know Python. They're going to present a problem and tell you how to use decision trees to solve that specific problem. I think that process is extremely powerful. You go from no machine learning background to understanding what the problem is and why you can't solve it with what you know right now, which is straight software engineering techniques.
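Santiago doesn't name the Kaggle course, so the dataset below is a stand-in. This minimal scikit-learn sketch trains a decision tree on the bundled iris dataset and checks it on held-out data. The `max_depth=3` limit and the 70/30 split are illustrative choices, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Features (flower measurements) and labels (species)
X, y = load_iris(return_X_y=True)

# Hold out 30% of the rows to estimate performance on unseen data
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

# A shallow tree stays small enough to inspect and explain
model = DecisionTreeClassifier(max_depth=3, random_state=0)
model.fit(X_train, y_train)

accuracy = accuracy_score(y_test, model.predict(X_test))
print(f"test accuracy: {accuracy:.2f}")
```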

3 Easy Facts About Machine Learning In Production Explained

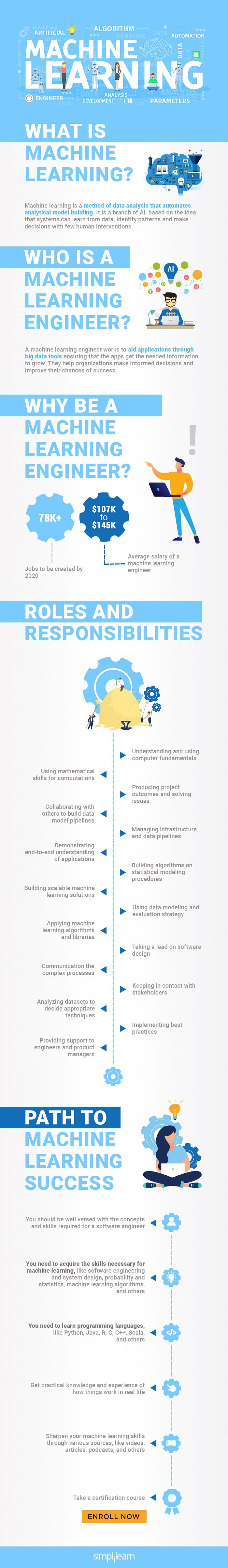

On the other hand, ML engineers focus on building and deploying machine learning models. They focus on training models with data to make predictions or automate tasks. There is overlap, but AI engineers handle a more diverse range of AI applications, whereas ML engineers have a narrower focus on machine learning algorithms and their practical application.

Machine learning engineers focus on developing and deploying machine learning models into production systems. They work on the engineering side, ensuring models are scalable, efficient, and integrated into applications. Data scientists, by contrast, have a broader role that includes data collection, cleaning, exploration, and model building. They are often responsible for extracting insights and making data-driven decisions.

As companies increasingly adopt AI and machine learning technologies, the demand for skilled professionals grows. Machine learning engineers work on cutting-edge projects, contribute to innovation, and earn competitive salaries.

ML is fundamentally different from traditional software development. It focuses on teaching computers to learn from data rather than programming explicit rules that are executed systematically. Uncertainty of outcomes: you are probably used to writing code with predictable outcomes, whether your function runs once or a thousand times. In ML, however, the outcomes are less certain.
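The contrast between explicit rules and rules learned from data fits in a few lines of plain Python. In this hypothetical example, the feature (number of links in an email) and the labeled data are invented for illustration. The "learning" is a brute-force search for the threshold with the fewest training errors, a deliberately tiny stand-in for real training algorithms.

```python
import random

# Traditional software: a programmer writes the rule explicitly.
def is_spam_rule(num_links):
    return num_links > 5  # threshold chosen by hand (hypothetical)

# ML-style: the threshold is learned from labeled (num_links, is_spam) examples.
def learn_threshold(examples):
    """Return the candidate threshold that misclassifies the fewest examples."""
    candidates = sorted({x for x, _ in examples})

    def errors(t):
        return sum((x > t) != label for x, label in examples)

    return min(candidates, key=errors)

data = [(0, False), (1, False), (2, False), (3, True), (4, True), (8, True)]
print(learn_threshold(data))  # 2

# The learned rule depends on which data the model happens to see:
# training on different random subsets may give different thresholds.
for seed in range(3):
    rng = random.Random(seed)
    print(seed, learn_threshold(rng.sample(data, 4)))
```

The hand-written rule behaves the same way on every run. The learned one changes whenever the training data changes, which is the uncertainty the paragraph above describes.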

- Pre-training and fine-tuning: how these models are trained on large datasets and then fine-tuned for specific tasks.
- Applications of LLMs: such as text generation, sentiment analysis, and information search and retrieval.
- Papers like "Attention Is All You Need" by Vaswani et al., which introduced transformers.
- Online tutorials and courses focusing on NLP and transformers, such as the Hugging Face course on transformers.
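The core operation introduced in the Vaswani et al. paper is scaled dot-product attention, `softmax(Q @ K.T / sqrt(d_k)) @ V`. Below is a minimal NumPy sketch of a single attention head. It assumes random inputs and omits the learned projection matrices, masking, and multi-head machinery of a full transformer.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V for a single head."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)       # (n_queries, n_keys) similarity scores
    weights = softmax(scores, axis=-1)    # each row is a distribution over keys
    return weights @ V, weights

rng = np.random.default_rng(0)
Q = rng.normal(size=(3, 4))  # 3 query tokens, d_k = 4
K = rng.normal(size=(5, 4))  # 5 key tokens
V = rng.normal(size=(5, 8))  # one value vector (d_v = 8) per key
out, w = scaled_dot_product_attention(Q, K, V)
print(out.shape)  # (3, 8)
```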

The smart Trick of Machine Learning Is Still Too Hard For Software Engineers That Nobody is Talking About

The ability to manage codebases, merge changes, and resolve conflicts is just as important in ML development as it is in traditional software projects. The skills developed in debugging and testing software applications are highly transferable. The context may shift from debugging application logic to identifying problems in data processing or model training. However, the underlying principles of systematic investigation, hypothesis testing, and iterative refinement are the same.
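Testing habits carry over directly. Here is a minimal sketch of a unit test for a hypothetical preprocessing step. Min-max scaling divides by the range of the column, so a constant feature would otherwise cause a division-by-zero error. The test pins that edge case down the same way you would guard any other piece of application logic.

```python
def normalize(values):
    """Min-max scale a list of numbers to [0, 1] (hypothetical preprocessing step)."""
    lo, hi = min(values), max(values)
    if hi == lo:  # guard: a constant column would otherwise divide by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def test_normalize():
    assert normalize([2, 4, 6]) == [0.0, 0.5, 1.0]
    assert normalize([3, 3, 3]) == [0.0, 0.0, 0.0]  # regression test for the edge case

test_normalize()
```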

Machine learning, at its core, relies heavily on statistics and probability theory. These are essential for understanding how algorithms learn from data, make predictions, and evaluate their performance. You should become comfortable with concepts like statistical significance, distributions, hypothesis testing, and Bayesian reasoning so that you can design and interpret models effectively.
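As a small worked example of Bayesian reasoning, consider a hypothetical classifier that flags fraudulent transactions. The numbers (1% base rate, 90% true-positive rate, 5% false-positive rate) are invented for illustration. Bayes' rule shows that most flagged transactions would still be legitimate.

```python
def posterior(prior, sensitivity, false_positive_rate):
    """P(fraud | flagged) via Bayes' rule."""
    # Total probability of a flag: true positives plus false positives
    p_flagged = sensitivity * prior + false_positive_rate * (1 - prior)
    return sensitivity * prior / p_flagged

p = posterior(prior=0.01, sensitivity=0.90, false_positive_rate=0.05)
print(round(p, 3))  # 0.154
```

Only about 15% of flagged transactions are actually fraudulent here, because the rare positive class is swamped by false positives from the much larger negative class.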

For those interested in LLMs, a thorough understanding of deep learning architectures is beneficial. This includes not only the mechanics of neural networks but also the architectures suited to different use cases. Examples are CNNs (Convolutional Neural Networks) for image processing, and RNNs (Recurrent Neural Networks) and transformers for sequential data and natural language processing.
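To show what a CNN layer actually computes, here is a minimal NumPy sketch of a single-channel "valid" 2D convolution with stride 1. Like deep learning libraries such as PyTorch, it computes cross-correlation, so the kernel is not flipped. Padding, strides, multiple channels, and learned weights are left out for brevity.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation: slide the kernel over the image, no padding, stride 1."""
    kh, kw = kernel.shape
    oh = image.shape[0] - kh + 1
    ow = image.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            # Element-wise product of the kernel and the patch under it, summed
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

image = np.arange(1, 10, dtype=float).reshape(3, 3)  # [[1,2,3],[4,5,6],[7,8,9]]
kernel = np.array([[1.0, 0.0], [0.0, -1.0]])         # diagonal-difference filter
print(conv2d_valid(image, kernel))
# [[-4. -4.]
#  [-4. -4.]]
```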

You should understand how bias can enter ML models and learn methods for identifying, mitigating, and communicating about it. This includes the potential impact of automated decisions and their ethical implications. Many models, especially LLMs, also require significant computational resources, which are often provided by cloud platforms like AWS, Google Cloud, and Azure.
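One simple way to start measuring bias is to compare how often a model makes positive predictions for each group. The sketch below computes per-group selection rates and their largest gap, often called the demographic parity difference. The predictions and group labels are made up. This is only one of several fairness metrics, each with its own trade-offs.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 means equal rates)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(selection_rates(preds, groups))                # {'a': 0.75, 'b': 0.25}
print(demographic_parity_difference(preds, groups))  # 0.5
```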

Building these skills will not only help you make a successful transition into ML but also ensure that you can contribute effectively and responsibly to the advancement of this dynamic field. Theory is essential, but nothing beats hands-on experience. Start working on projects that let you apply what you have learned in a practical context.

- Take part in competitions: join platforms like Kaggle to participate in NLP competitions.
- Build your own projects: start with simple applications, such as a chatbot or a text summarization tool, and gradually increase the complexity.

The field of ML and LLMs is evolving rapidly, with new breakthroughs and technologies emerging regularly. Staying up to date with the latest research and trends is crucial.
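For the text summarization project idea, a first version can be as simple as a frequency-based extractive summarizer. This plain-Python sketch scores each sentence by the average frequency of its words across the whole text and keeps the top scorers. It is a naive baseline under those assumptions, not how modern LLM-based summarizers work.

```python
import re
from collections import Counter

def summarize(text, n_sentences=1):
    """Naive extractive summary: keep the sentences whose words are most frequent overall."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(re.findall(r"[a-z']+", text.lower()))

    def score(sentence):
        tokens = re.findall(r"[a-z']+", sentence.lower())
        return sum(freq[t] for t in tokens) / max(len(tokens), 1)

    top = sorted(sentences, key=score, reverse=True)[:n_sentences]
    # Return the chosen sentences in their original order
    return " ".join(s for s in sentences if s in top)

text = ("Machine learning models learn from data. "
        "Models need data to learn. "
        "The weather is nice today.")
print(summarize(text))  # Models need data to learn.
```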

9 Easy Facts About Top Machine Learning Careers For 2025 Explained

Join communities and forums, such as Reddit's r/MachineLearning or community Slack channels, to discuss ideas and get advice. Attend workshops, meetups, and conferences to connect with other professionals in the field. Contribute to open-source projects or write blog posts about your learning journey and projects. As you gain expertise, start looking for opportunities to incorporate ML and LLMs into your work, or seek new roles focused on these technologies.

- Vectors, matrices, and their role in ML algorithms.
- Terms like model, dataset, features, labels, training, inference, and validation.
- Data collection, preprocessing techniques, model training, evaluation procedures, and deployment considerations.
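Several of these terms can be tied together in one small NumPy sketch:

- The dataset is a matrix of features plus a vector of labels.
- Training fits a weight vector by least squares.
- Validation measures error on held-out rows.
- Inference applies the weights to a new example.

The data is synthetic and noise-free, an assumption chosen so the learned weights are easy to check.

```python
import numpy as np

rng = np.random.default_rng(42)

# Dataset: 100 examples x 3 features (a matrix) and one label per example (a vector)
X = rng.normal(size=(100, 3))
true_w = np.array([2.0, -1.0, 0.5])
y = X @ true_w + 3.0  # noise-free labels with a bias term of 3.0

# Append a column of ones so the bias is learned as an extra weight
X1 = np.hstack([X, np.ones((100, 1))])

# Train / validation split
X_train, X_val = X1[:80], X1[80:]
y_train, y_val = y[:80], y[80:]

# Training: least-squares fit of the weight vector
w, *_ = np.linalg.lstsq(X_train, y_train, rcond=None)

# Validation: error on rows the model did not see during training
val_mse = np.mean((X_val @ w - y_val) ** 2)

# Inference: predict the label of a new, unlabeled example
x_new = np.array([1.0, 0.0, 2.0, 1.0])  # last entry is the bias column
prediction = x_new @ w
print(np.round(w, 3), val_mse, prediction)
```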

- Decision Trees and Random Forests: intuitive and interpretable models.
- Support Vector Machines: maximum-margin classification.
- Matching problem types with suitable models.
- Balancing performance and complexity.
- Basic structure of neural networks: neurons, layers, activation functions.
- Layered computation and forward propagation.
- Feedforward Networks, Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs).
- Image recognition, sequence prediction, and time-series analysis.
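Forward propagation through a tiny feedforward network is just repeated matrix multiplication plus an activation function. In this NumPy sketch, the weights are hand-picked for illustration rather than trained, and the hidden layer is assumed to use ReLU.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def forward(x, layers):
    """Forward propagation: each layer computes activation(W @ a + b)."""
    a = x
    for i, (W, b) in enumerate(layers):
        z = W @ a + b
        # ReLU on hidden layers, identity on the output layer
        a = relu(z) if i < len(layers) - 1 else z
    return a

# Hand-picked weights: 2 inputs -> 2 hidden neurons -> 1 output
layers = [
    (np.array([[1.0, -1.0], [0.5, 0.5]]), np.array([0.0, -1.0])),
    (np.array([[2.0, 1.0]]), np.array([0.5])),
]
print(forward(np.array([3.0, 1.0]), layers))  # [5.5]
```

For the input `[3, 1]`, the hidden pre-activations are `[2, 1]`, both positive, so ReLU passes them through and the output is `2*2 + 1*1 + 0.5 = 5.5`.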

- Data flow, transformation, and feature engineering techniques.
- Scalability principles and performance optimization.
- API-driven approaches and microservices integration.
- Latency management, scalability, and version control.
- Continuous Integration/Continuous Deployment (CI/CD) for ML workflows.
- Model monitoring, versioning, and performance tracking.
- Detecting and addressing changes in model performance over time.
- Resolving performance bottlenecks and managing resources.
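One common technique for detecting changes in model inputs over time (data drift) is the Population Stability Index (PSI). It compares the binned distribution of a feature in production against its training distribution. The sketch below is a minimal NumPy version under simple assumptions: equal-width bins taken from the training sample, and a small epsilon for empty bins. It is not tied to any particular monitoring tool.

```python
import numpy as np

def population_stability_index(expected, actual, bins=10):
    """PSI between a reference (training) sample and a live (production) sample."""
    edges = np.histogram_bin_edges(expected, bins=bins)
    # Clip live values into the reference range so outliers land in the edge bins
    actual = np.clip(actual, edges[0], edges[-1])
    e_counts, _ = np.histogram(expected, bins=edges)
    a_counts, _ = np.histogram(actual, bins=edges)
    eps = 1e-6  # keeps empty bins from producing log(0)
    e_frac = e_counts / e_counts.sum() + eps
    a_frac = a_counts / a_counts.sum() + eps
    return float(np.sum((a_frac - e_frac) * np.log(a_frac / e_frac)))

rng = np.random.default_rng(0)
train_feature = rng.normal(0.0, 1.0, 10_000)
live_same = rng.normal(0.0, 1.0, 10_000)
live_shifted = rng.normal(1.0, 1.0, 10_000)  # simulated drift: mean moved by one std dev

psi_same = population_stability_index(train_feature, live_same)
psi_shifted = population_stability_index(train_feature, live_shifted)
print(f"no drift: {psi_same:.3f}, drift: {psi_shifted:.3f}")
```

A frequently quoted rule of thumb treats a PSI below 0.1 as stable and one above 0.25 as significant drift. These cut-offs are conventions rather than guarantees, so calibrate them on your own data.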

The 45-Second Trick For How To Become A Machine Learning Engineer & Get Hired ...

Course Overview

Machine learning is the future for the next generation of software professionals. This course serves as a guide to machine learning for software engineers. You'll be introduced to three of the most relevant components of the AI/ML discipline: supervised learning, neural networks, and deep learning. Through hands-on development in supervised learning, you'll learn the differences between traditional programming and machine learning. After that, you'll build complex distributed applications with neural networks.


